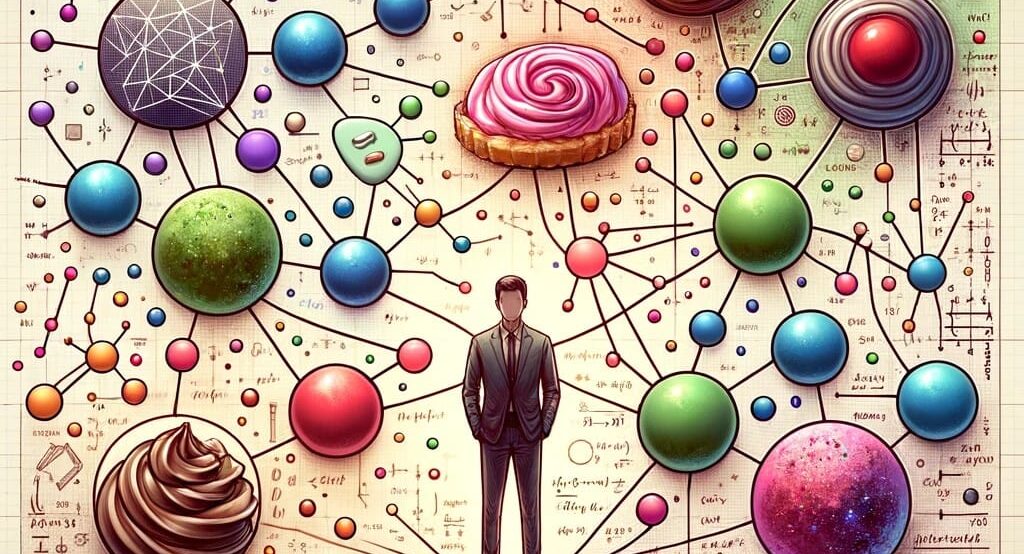

Understanding Contextual Decision-Making in AI through Vector and Graph Models

In the realm of artificial intelligence (AI), understanding human behavior and decision-making has always been a fascinating challenge. A simple yet profound scenario, a person with a sweet tooth craving a specific sweet from a particular shop only to find it unavailable, offers a perfect illustration of the complexity AI models face in mimicking human decision-making. This individual’s choices may vary:

– avoiding the sweet altogether,
– selecting an alternative sweet from the same shop, or
– seeking the desired sweet at another shop.

These options highlight the nuanced and context-dependent nature of human decisions. So, let’s dive into the very challenge posed by contextual decision-making.

The key to replicating this decision-making process in AI lies in the ability to model not just the decisions themselves, but the context and factors influencing them. Contextual decision-making in AI is about understanding the ‘why’ behind each choice and the factors that push a decision in different directions. It requires a model that can weigh factors such as urgency, preference, availability, and past experience to predict or make decisions in a manner that aligns with human behavior.

Welcome! Vector Models: best suited for understanding preferences and similarities.

Vector models, particularly those based on embedding techniques like Word2Vec or GloVe, offer a way to represent entities (such as sweets, shops, or preferences) in a multi-dimensional space. In this space, the distance and direction between vectors can signify similarity or preference. For example, different sweets can be represented as vectors, and a person’s preference can be modeled as a vector pointing towards their favored sweet.
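To make the vector idea concrete, here is a minimal Python sketch, assuming made-up three-dimensional ‘taste’ vectors for a few sweets (the sweet names, the dimensions, the numbers, and the 0.8 threshold are all illustrative, not learned embeddings). It uses cosine similarity, cos θ = (a · b) / (‖a‖ ‖b‖), to pick the in-stock sweet closest to the person’s preference, and returns None, read as ‘skip the sweet altogether’, when nothing is close enough.

```python
import math

# Hypothetical "taste" vectors: [sweetness, richness, nuttiness].
# These numbers are invented for illustration; a real system would learn them.
SWEETS = {
    "kaju katli": [0.8, 0.6, 0.9],
    "gulab jamun": [0.9, 0.8, 0.1],
    "rasgulla": [0.7, 0.2, 0.0],
    "badam barfi": [0.7, 0.6, 0.8],
}


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_alternative(preference, available, min_similarity=0.8):
    """Pick the available sweet whose vector best matches the preference.

    Returns None when nothing reaches min_similarity, which we read as the
    person skipping the sweet altogether. The 0.8 default is arbitrary.
    """
    if not available:
        return None
    best = max(available, key=lambda name: cosine_similarity(preference, SWEETS[name]))
    if cosine_similarity(preference, SWEETS[best]) < min_similarity:
        return None
    return best


# The preference vector points at the favourite sweet, which is sold out today.
preference = SWEETS["kaju katli"]
in_stock = ["gulab jamun", "rasgulla", "badam barfi"]
print(best_alternative(preference, in_stock))      # badam barfi
print(best_alternative(preference, ["rasgulla"]))  # None
```

With these toy numbers, badam barfi wins because its vector points in almost the same direction as kaju katli. In a real system the vectors would come from a trained embedding model and the threshold would be tuned against observed behavior.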
The absence of the desired sweet at the preferred shop can be represented as a deviation from that preference, and the AI’s task is to find the vector (decision) that realigns with the person’s preference vector as closely as possible under the given context.

Vector Models now face a worthy rival: Graph Models, used for mapping relationships and influencing factors.

Graph models excel at representing complex relationships and dependencies between entities and decisions. In our scenario, a directed graph could model entities (people, sweets, shops) as nodes and the relationships or decisions (preferences, availability, choices) as edges, with weights representing the strength or importance of these relationships. Factors like urgency and importance can dynamically adjust these weights, influencing the decision path the model predicts or recommends. For instance, a graph model can represent the decision-making process as a pathfinding problem. Nodes represent the person, potential sweets, and shops, while edges represent the possible decisions (e.g., choosing a different sweet, going to another shop). The edge weights could be influenced by factors such as urgency (how badly does the person want the sweet?) or convenience (how easy is it to go to another shop?), guiding the AI in predicting the most likely decision under the given circumstances.

Let’s add the spice called Contextual Awareness to this recipe to make it truly AI-driven.

To effectively mimic human decision-making, AI models need to integrate context awareness, understanding that the same person might make different decisions under different circumstances. This involves dynamically adjusting the model’s parameters (vector directions, graph edge weights) based on the current context, past behaviors, and the relative importance of various factors, including personas.
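The pathfinding framing and the context-dependent weights can be sketched together in Python. This is a minimal illustration, not a production model: the node names, the cost formulas, and the two context parameters (`urgency` between 0 and 1, and `travel_effort`) are hypothetical. Because Dijkstra’s algorithm finds the cheapest path, edge weights here are costs (lower means more attractive) rather than strengths, and all of them stay non-negative, as the algorithm requires.

```python
import heapq
import math


def build_decision_graph(urgency, travel_effort):
    """Hypothetical decision graph; edge weights are costs (lower = more attractive).

    urgency: 0.0 (mild craving) to 1.0 (must have it now).
    travel_effort: cost of getting to another shop.
    """
    return {
        "craving": {
            "other sweet, same shop": 2.0,
            "walk to shop B": travel_effort,
            "skip the sweet": 1.0 + 6.0 * urgency,  # skipping hurts more when urgent
        },
        # A substitute disappoints more when the craving is urgent.
        "other sweet, same shop": {"done": 1.0 + 2.0 * urgency},
        "walk to shop B": {"desired sweet at shop B": 0.5},
        "desired sweet at shop B": {"done": 0.0},
        "skip the sweet": {"done": 0.0},
        "done": {},
    }


def dijkstra(graph, start, goal):
    """Return (total_cost, path) of the cheapest path from start to goal."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, weight in graph[node].items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + weight, neighbour, path + [neighbour]))
    return math.inf, []


for urgency, travel_effort in [(0.1, 5.0), (0.9, 1.0), (0.7, 5.0)]:
    graph = build_decision_graph(urgency, travel_effort)
    cost, path = dijkstra(graph, "craving", "done")
    # Drop the start and goal nodes to show only the decision steps.
    print(f"urgency={urgency}, travel_effort={travel_effort}: {' -> '.join(path[1:-1])}")
```

Run as written, the three contexts lead to three different decisions: with low urgency and a distant shop B the person skips the sweet; with high urgency and an easy trip they walk to shop B; and with fairly high urgency but a hard trip they settle for another sweet at the same shop. The graph is the same in every case; only the context-adjusted weights change.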
I’ll conclude this interesting topic by highlighting the underlying mathematical foundations, which have existed for decades, if not centuries. The mathematics underpinning these AI decision-making processes draws on vector-space methods and graph theory. Techniques such as cosine similarity for vector models or Dijkstra’s algorithm for graph models can be used to evaluate decision options and predict outcomes. Of course, fine-tuning these is nothing less than a perfect blend of art and science, where human and machine intelligence come together to produce a wonderful product.

So, in conclusion, the sweet shop dilemma underscores the importance of context and variability in human decision-making. By leveraging vector and graph models, AI can better understand and predict human behavior, offering insights not just for simple decisions but for the complex, context-driven choices that define our everyday lives. As AI continues to evolve, the ability to accurately model and respond to the nuanced preferences of individuals will be crucial for developing more intuitive, human-like AI systems, and one day, in the distant future, the walls between human and machine decision-making may diminish.

Happy Reading!!

Author – Sumit Rajwade, Co-founder: mPrompto

Why Every Lost Customer is a Lesson Learned with Gen AI

On my first day consulting for a D2C startup, I found myself in their brightly lit office alongside the founder, both of us fixated on the significant dip from ‘Add to Cart’ to ‘Purchase’. For a moment, I was transported back to my school days, learning about the Mariana Trench, and couldn’t help but draw parallels between that deep abyss and the drop in sales we were witnessing. Despite the founder’s wish to simply will it away, this “Trench” had been deepening over the last six months, and he was at a loss about what was causing it. I half-joked that despite my extensive experience in digital marketing, I lacked the ability to read minds, which drew a half-smile in response. I then suggested that his team call around 500 customers who had abandoned their carts. What we learned from this exercise was both profound and fundamentally simple, and it led to such a significant change that we began meeting our monthly targets within just two weeks. (Connect with me one-on-one to learn more!)

That got me thinking: in the ever-busy world of e-commerce, most of our attention goes to the customers we manage to bring onboard. We scrutinize their Customer Acquisition Cost (CAC), their Lifetime Value (LTV), and how they came to us (Attribution). But there’s a crucial piece of the puzzle that often gets overlooked: The Customers We Lose Along The Way.

Imagine you’re a customer shopping online. You add items to your cart but then, for some reason, decide not to buy. Or maybe you make a purchase once but never return to buy again. These scenarios are more common than you might think, and they represent a goldmine of insights that brands often leave unexplored.

The Missed Opportunity in Understanding ‘Why’

Most companies are good at tracking the ‘what’, like how many customers they’ve lost or what their churn rate is.
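Tracking the ‘what’ really is simple arithmetic, which is why it gets done. A minimal Python sketch with made-up monthly numbers (not the startup’s figures from this story) shows two headline metrics; notice that neither number says anything about why customers left.

```python
def loss_rate(lost, total):
    """Percentage of `total` that was lost (0.0 if there is nothing to measure)."""
    return 100.0 * lost / total if total else 0.0


# Hypothetical monthly figures, invented for illustration.
added_to_cart = 4_000      # carts created
purchased = 1_000          # carts that became orders
customers_at_start = 2_500
customers_lost = 300

cart_abandonment = loss_rate(added_to_cart - purchased, added_to_cart)
churn = loss_rate(customers_lost, customers_at_start)

print(f"Cart abandonment: {cart_abandonment:.1f}%")  # Cart abandonment: 75.0%
print(f"Monthly churn: {churn:.1f}%")                # Monthly churn: 12.0%
```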
But understanding the ‘why’, why a customer decided not to buy or why they didn’t come back, is where the real treasure lies. This is where Generative AI comes into play and where we, at mPrompto, are making strides.

The Generative AI Difference

By leveraging Generative AI during the customer onboarding process, we at mPrompto have developed a unique approach to not only understand what a customer wants but also why they want it. This insight allows brands to tailor their communication more effectively and gain a deeper understanding of their customers’ needs and motivations.

The Benefits Unlocked for Brands

– Improved Customer Service: Understanding the specific reasons behind a customer’s dissatisfaction enables you to address these issues directly, leading to better customer experiences.
– Reduced Churn: By proactively addressing concerns and improving the customer experience, you’re more likely to retain customers.
– Price Elasticity Check: Insights into why customers might be sensitive to price changes allow for more strategic pricing decisions.
– Product Improvement: Direct feedback on products helps in making necessary adjustments and innovations.
– Untapped Customer Insights: Learning from lost customers can reveal new market opportunities and customer needs that haven’t been met.

Marketing Teams as Custodians of Customer Relationships

By focusing on the ‘why’ behind customer behaviors, marketing teams can transcend their traditional roles. They become guardians of the customer experience, armed with insights that can drive the company forward in more customer-centric directions.

The Bottom Line

Every customer lost is indeed an insight gained. With the advent of Generative AI, the e-commerce landscape is poised for a transformation. By understanding not just what our customers do but why they do it, we open the door to unprecedented growth and connection.
In the end, it’s not just about recovering lost customers but about learning from them to prevent future losses. This shift in focus from the ‘what’ to the ‘why’ is not just a change in strategy but a revolution in understanding customer relationships. And with Generative AI, we’re just getting started.

Author – Ketan Kasabe, Co-founder: mPrompto

Sherlock Holmes of Generative AI

Sherlock Holmes meets a stranger. Within moments, he begins his deductions, speaking with confidence and precision. “You’re a surgeon,” he declares, noting the gentleman’s precise yet calloused hands, a tell-tale sign of frequent surgeries. He observes a faint stain of iodine on the man’s shirt cuff, common in medical settings, and spots a ticket stub for a medical lecture poking out of his pocket. Holmes continues, “Recently returned from abroad.” The uneven suntan indicates the man had been in a sunny region and wore a hat most of the time, suggesting not a holiday but work under the sun. The type of hat? A pith helmet, typical of those worn in tropical regions by Europeans at the time.

Just as Sherlock Holmes unravels the intricate details of a stranger’s life with his sharp observations and deductions, Generative AI can work through a problem by piecing information together to generate an insightful response. This way of working, often referred to as “Chain of Thought,” mirrors Holmes’ methodical approach. In the world of AI, it means the model spells out intermediate reasoning steps, identifying the relevant details in a query and connecting them logically, instead of jumping straight to an answer. When Holmes observes the surgeon’s calloused hands or the uneven suntan, he’s not just seeing isolated facts; he’s linking them into a broader narrative. Similarly, when tasked with a question, a Large Language Model (LLM) doesn’t merely spit out pre-programmed responses. Instead, it moves through a series of intermediate points, much like stepping stones, to reach a conclusion. Each step in this process is akin to Holmes deducing the surgeon’s profession or his recent journey abroad: a calculated, sequential progression towards understanding.
Thus, the LLM’s ‘thinking’ – its ability to process and connect information in a coherent, step-by-step manner – is not unlike the legendary detective’s famed deductive reasoning, offering a glimpse into how artificial intelligence can mimic sophisticated human thought processes. The Chain of Thought capability in AI opens a treasure trove of opportunities for businesses and individuals seeking innovation and efficiency. For businesses, this feature can be a game-changer in areas like customer service, where AI can not only respond to queries but also anticipate and address underlying concerns, leading to a more intuitive and satisfying customer experience. In decision-making, executives can use AI to simulate various scenarios and outcomes, providing a detailed analysis of each step in the decision chain, thereby enhancing strategic planning with data-driven insights. For creatives, this aspect of AI can inspire new directions in projects, offering a fresh perspective by logically connecting disparate ideas. On an individual level, AI’s Chain of Thought can aid in personal development and learning, tailoring educational content based on the individual’s learning style and progression. It can also assist in daily tasks, like budgeting or scheduling, by understanding patterns in behavior and preferences, thus offering optimized, personalized solutions. By harnessing this sophisticated aspect of AI, both businesses and individuals can not only streamline operations but also foster a culture of innovation, making strides towards a more efficient, data-informed future. Generative AI, empowered by its chain of reasoning, is making significant strides in solving complex problems across diverse sectors. In healthcare, AI models are revolutionizing diagnostic processes. 
For instance, AI systems can analyze medical images, working through a series of steps to identify patterns indicative of diseases like cancer, in some tasks matching or even exceeding the accuracy and speed of human specialists [1]. This kind of stepwise analysis not only improves diagnosis but can also help personalize treatment plans based on a patient’s unique medical history. In finance, AI is used for risk assessment and fraud detection. By analyzing spending patterns and account behavior, AI can flag anomalies that might indicate fraudulent activity, enhancing security and efficiency in financial transactions. In the creative industries, AI’s chain of reasoning is being harnessed for tasks like scriptwriting and music composition. By understanding and connecting various elements of storytelling or music theory, AI can assist artists in generating novel and intricate works, opening new avenues for creativity. These examples underscore how AI’s advanced reasoning capabilities are not just automating tasks but also providing deeper insights and innovations, transforming the landscape of these fields.

I invite you to embark on a detective journey akin to Sherlock Holmes’ adventures by engaging with an LLM like ChatGPT. Ask it to reveal its ‘Chain of Thought’ while responding to your queries or crafting an email. This experience will let you observe how Generative AI models ‘think’ and piece together information, much like Holmes unravels a mystery. If this glimpse into the AI’s deductive prowess intrigues you, do share your experience with a friend. For any inquiries or discussions about the fascinating world of Generative AI and its ‘Sherlockian’ reasoning abilities, feel free to reach out. I am always keen to investigate these modern-day mysteries further with curious minds.
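If you want to try this, a small helper that builds such a prompt might look like the Python sketch below. The function name and the template wording are illustrative assumptions, not an official ChatGPT format, and the script only prints the prompt; you would paste the output into any chat-based LLM yourself. The observations reuse Holmes’s clues from the opening scene.

```python
def chain_of_thought_prompt(task, observations):
    """Build a prompt that asks an LLM to show its reasoning before answering.

    The wording is one illustrative template, not an official format.
    """
    lines = [
        "You are a careful analyst.",
        f"Task: {task}",
        "Observations:",
    ]
    lines += [f"- {obs}" for obs in observations]
    lines += [
        "",
        "Think step by step. Number each step, say which observation it uses,",
        "and only then state your final conclusion on a line starting with 'Conclusion:'.",
    ]
    return "\n".join(lines)


prompt = chain_of_thought_prompt(
    "Deduce the profession and recent travels of a stranger.",
    [
        "Precise yet calloused hands",
        "Faint iodine stain on the shirt cuff",
        "Ticket stub for a medical lecture in his pocket",
        "Uneven suntan, as if from wearing a pith helmet",
    ],
)
print(prompt)
```

Asking for numbered steps tied to specific observations makes the model’s ‘deductions’ easy to check one by one, much as Watson could question each of Holmes’s inferences.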
Author – Ketan Kasabe, Co-founder: mPrompto

Reference:
[1] https://openmedscience.com/revolutionising-medical-imaging-with-ai-and-big-data-analytics/#:~:text=AI%20and%20Deep%20Learning%20for%20Medical%20Image%20Analysis,-Artificial%20intelligence%20(AI&text=AI%20algorithms%20can%20detect%20early,function%20and%20diagnose%20heart%20disease.

Smart Travel using AI and ChatGPT – OTOAI Convention 2023, Kenya

As a presenter invited to the Outbound Tour Operators Association of India (OTOAI) 2023 Convention in Kenya, I tried to shed light on the transformative power of data and the pivotal role of AI in the tourism industry. Showcasing a travel inspiration demo from mPrompto, I emphasized the significance of AI, particularly ChatGPT, in revolutionizing the travel planning process. You may find the presentation interesting, as it delves into the world of AI, explaining the concepts of AI, ML, and Deep Learning, and highlights the capabilities of ChatGPT. It also addresses the need for controlling generative AI and the myths and realities associated with it. The presentation further discusses the Chain of Thought technique, which enables AI language models to emulate human-like thinking in problem-solving scenarios. I also tried to explain techniques for working with models like ChatGPT, such as embeddings, prompt engineering, RAG, RLHF, and fine-tuning, showing how they enhance the model’s performance in understanding and generating travel-related content. The convention’s focus on leveraging AI and ChatGPT underscores their potential in shaping the future of smart tourism. You can download the document from here.

Happy reading!!

Author – Sumit Rajwade, Co-founder: mPrompto

Role of Subject Matter Experts in B2B Generative AI Applications

This blog is an attempt to highlight the ever-evolving landscape of generative AI in the business-to-business (B2B) sector and the crucial role humans will play in making it relevant. I still remember, from my time at Rediff, seeing great editors give a story the apt title (only after verifying it from three independent sources) and highlight what “matters” to make it interesting to read. Otherwise, the standard ANI or PTI wire stories were, well, boring. Drawing a correlation, I can see that AI-driven responses mirror a time-tested practice in the news industry, where an expert editor crafts a story’s impact through a well-chosen title and slug. In the same manner, when deploying generative AI applications like ChatGPT in the B2B space, the focus shifts to editing and templatizing responses to ensure relevance and accuracy, a job only “responsible” humans should do. This approach is essential because, while AI can generate vast amounts of content, it lacks the understanding of a subject matter expert (SME). An SME is not just any human in the loop; they are the responsible human in the loop. This distinction is crucial because an SME brings depth of knowledge and context-specific insight that a general overseer cannot. In the context of generative AI, such as the GPT models, this expertise becomes invaluable in addressing one of the models’ notable weaknesses: hallucinations, the generation of plausible but incorrect or nonsensical information that can lead users down the wrong path. Having an SME as part of the process is more than a quality check. It is about understanding the nuances of the industry, the specific needs of the business, and the balance between finesse of language and accuracy of information that AI alone might miss. This level of involvement ensures that the responses generated by AI are not just accurate but also relevant and tailored to the specific context of the B2B environment.
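One way to picture the ‘responsible human in the loop’ is as a review gate that every AI draft must pass before it reaches a client. The Python sketch below is hypothetical (the class names, fields, and the sample warranty answer are invented for illustration): the SME either approves a draft or replaces it, and every correction is kept as a (prompt, rejected draft, approved text) record, the kind of preference data that can later inform fine-tuning.

```python
from dataclasses import dataclass, field


@dataclass
class Review:
    prompt: str
    ai_draft: str
    final_text: str
    reviewer: str


@dataclass
class ReviewQueue:
    """Hypothetical human-in-the-loop gate: no AI draft goes out unreviewed."""
    log: list = field(default_factory=list)

    def review(self, prompt, ai_draft, reviewer, sme_edit=None):
        """Record the SME's decision and return the text that may be sent.

        sme_edit=None means the SME approved the draft unchanged.
        """
        final_text = ai_draft if sme_edit is None else sme_edit
        self.log.append(Review(prompt, ai_draft, final_text, reviewer))
        return final_text

    def feedback_pairs(self):
        """(prompt, rejected, chosen) triples for every draft the SME corrected."""
        return [
            (r.prompt, r.ai_draft, r.final_text)
            for r in self.log
            if r.final_text != r.ai_draft
        ]


queue = ReviewQueue()
# The SME catches a hallucinated warranty period and corrects it.
queue.review(
    "What is the warranty on the X-200 pump?",
    "The X-200 comes with a 5-year warranty.",
    reviewer="Product SME",
    sme_edit="The X-200 comes with a 2-year warranty, extendable to 3 years.",
)
# A correct draft is approved as-is and produces no feedback pair.
queue.review("What are your support hours?", "9 am to 6 pm, Monday to Friday.", reviewer="Support SME")

for prompt, rejected, chosen in queue.feedback_pairs():
    print(f"{prompt}\n  rejected: {rejected}\n  chosen:   {chosen}")
```

Only the corrected exchange becomes a feedback record; the approved answer passes through untouched, so the SME’s effort is spent exactly where the model went wrong.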
Furthermore, incorporating SMEs into the AI loop works much like a form of Reinforcement Learning from Human Feedback (RLHF): their corrections can drive the continuous improvement of AI models based on this “responsible” human input. By identifying and correcting errors or shortcomings in AI-generated content, SMEs highlight areas for future work and development in these models. Their insights help fine-tune the AI’s output, making it more reliable and effective for B2B applications and truly putting blended Human + Computer Interaction into effect.

To conclude, while generative AI holds immense potential for the B2B sector, its successful deployment hinges on integrating SMEs into the process. They bring a level of scrutiny, understanding, and context that goes beyond what AI can achieve alone. By acting as a bridge between AI capabilities and real-world knowledge, SMEs ensure that the technology is not just a tool for generating content, but a reliable partner in the B2B narrative. However, relying too heavily on a well-trained AI model could lead SMEs to stop thinking outside the box; content creation would continue, but creativity would suffer. Time will tell whether the danger is real, whether machines will start being creative, and whether humans will evolve!!

Author – Sumit Rajwade, Co-founder: mPrompto

Friends like Horses and Helicopters

The other day, I was watching my daughter learn about word associations from my wife, Aditi. Aditi was explaining the concept of “friends of letters,” where each letter is associated with words that start with it, much like “H” has friends in “horse” and “helicopter.” This got me thinking about the world of generative AI, a field I find myself deeply immersed in. Just as my daughter is taught to associate letters with words, generative AI operates on a similar principle, predicting the next word or phrase in a sequence based on associations and patterns it has learned from vast datasets. Now, the analogy of letter friends is a great starting point for grasping the basics of how generative AI works. However, it’s a bit simplistic when we consider the full complexity of these models. Generative AI doesn’t just consider the “friends” or associations of a word. It also takes into account the context of the entire sentence, paragraph, or even document to generate text that is coherent and contextually relevant. It’s like taking word association to a whole new level. So, when the AI sees the word “beach,” it doesn’t just think of “sand,” “waves,” and “sun” as its friends. It also considers the entire context of the sentence or paragraph to understand the specific relationship between “beach” and its friends in that situation.

Let’s take a moment to delve deeper into how a Large Language Model (LLM) like GPT-3 works. When you input a phrase or sentence, the model processes each word and its relation to the others in the sequence, drawing on the patterns and associations it learned from the vast amounts of text in its training data. It then assigns a probability to each candidate for the next word (strictly speaking, the next token) and picks a likely one, repeating this step to build up a response. It’s a bit like the predictive text on your phone, but on steroids. But here’s where it gets tricky.
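A toy example helps show both the idea and where it breaks down. The Python sketch below is a bigram model: it learns the ‘friends’ of each word by counting which word follows it in a tiny made-up corpus, then guesses the most frequent follower. Real LLMs use neural networks that weigh the whole context rather than a single previous word, so treat this strictly as an illustration of the letter-friends level of the analogy.

```python
from collections import Counter, defaultdict

# A tiny invented "training corpus"; real LLMs learn from vastly more text.
SENTENCES = [
    "our innovative solutions meet your specific needs",
    "our innovative solutions meet your business goals",
    "our innovative solutions optimize energy storage",
]


def train_bigrams(sentences):
    """For each word, count which words follow it: its learned 'friends'."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Guess the most frequent follower of `word`, or None if it was never followed."""
    if not follows.get(word):
        return None
    return follows[word].most_common(1)[0][0]


def complete(follows, start, max_words):
    """Greedily extend `start` one predicted word at a time."""
    words = [start]
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


follows = train_bigrams(SENTENCES)
print(predict_next(follows, "solutions"))  # meet (seen twice) beats optimize (seen once)
print(complete(follows, "our", 4))         # our innovative solutions meet your
```

Because “meet” followed “solutions” more often than “optimize” did, the toy model always suggests the generic completion, whatever the client’s industry. That is a crude version of the problem an educated guess runs into.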
These models are, at the end of the day, making educated guesses, and sometimes those guesses can be off the mark. We’ve all had our share of amusing auto-correct fails, haven’t we? Let me explain with an example from a B2B scenario: drafting emails or reports, a common task in the business world. Imagine you’re a sales professional at a B2B company tasked with writing a tailored business proposal for a potential client. You start by typing the opening line of the proposal into a text editor integrated with an LLM like GPT-3. As you type “Our innovative solutions are designed to…”, the LLM suggests completing the sentence with “…meet your company’s specific needs and enhance operational efficiency.” The model has learned from a myriad of business documents that such phrases commonly follow the words “innovative solutions” in a sales context. However, trouble arises when the LLM lacks industry-specific knowledge about the potential client’s niche market or the technical specifics of your product. If you simply accept the LLM’s suggestions without customization, the result might be a generic statement that doesn’t resonate with the client’s unique challenges or objectives. For instance, if the client is in the renewable energy sector and your product is software that optimizes energy storage, the LLM might not automatically include the relevant jargon or address industry-specific pain points unless it has been fine-tuned on such data or given that context. So, while the LLM can give you a head start, you need to guide it by adding specifics: “Our innovative solutions are designed to optimize energy storage and distribution, allowing your renewable energy company to maximize ROI and reduce waste.” Here, the added specifics (“optimize energy storage and distribution,” “renewable energy company,” “maximize ROI and reduce waste”) reflect the B2B customization that an educated guess from an LLM might not get right on its own.
This illustrates the importance of human oversight when using LLMs for B2B applications. While these models can enhance productivity and efficiency, they still require human expertise to ensure that the output meets the high standards of accuracy and relevance expected in the business-to-business arena. My next blog will cover exactly that: how a ‘Responsible Human in the Loop’ makes all the difference for an organization working with generative AI use cases, and how these humans add a layer of safety and relevance to the responses generated by LLMs. So, stay tuned! If this blog has helped you understand Generative AI better, do drop me a line. I always look forward to a healthy conversation around Generative AI. Remember, the world of AI is constantly evolving, and there’s always something new and exciting just around the corner. Let’s explore it together!

Author – Ketan Kasabe, Co-founder: mPrompto

“Knowledge is power.” – Sir Francis Bacon

Picture the year 1597. The cobbled streets of London bustled with energy as scholars and thinkers gathered at the renowned Gresham College. Amidst the hum of scholarly debates and the rustling of parchment, a distinctive voice rang out, drawing the crowd’s attention. Francis Bacon, with his intense gaze and commanding presence, stood addressing the audience. “Knowledge,” he proclaimed, “is power.” The murmurs grew louder, with many nodding in agreement, while others exchanged quizzical glances. A young scholar nearby scribbled the words into his notebook, not realizing he was jotting down a phrase that would resonate through the ages. Little did the audience know that this statement would not only define an era but also become a driving force behind humanity’s relentless pursuit of democratization. From the Gutenberg press to the dawn of artificial intelligence, this ethos has been at the heart of every revolution. In every age, there emerges a force that turns barriers into bridges, empowering each soul to partake in the shared tapestry of human progress. Let me take you through this journey of democratization across various aspects of our lives.

Democratization of Knowledge – The Gutenberg Effect

In the mid-15th century, a revolutionary invention emerged that would change the trajectory of human history: the movable-type printing press, introduced in Europe by Johannes Gutenberg. This wasn’t just a machine; it was a beacon of change. Before its advent, books in Europe were painstakingly copied by hand, often by monks, making them rare and costly. This scarcity meant that knowledge was the privilege of the elite few. With the printing press, books became far more accessible. The Gutenberg Bible, one of the earliest major books printed with movable type in Europe, signaled a new era in which ideas could be mass-produced and disseminated. Knowledge was no longer chained to the shelves of the affluent; it started reaching the common man, paving the way for the Enlightenment, scientific discoveries, and cultural revolutions.
Democratization of Entertainment – From Theatres to Television

Before television became a household staple, grand theaters were the entertainment hubs. Theaters offered live performances but were exclusive, with limited seats and high prices. Then television arrived, promising widespread accessibility and breaking the theater’s exclusivity. Families gathered around their TV sets for diverse shows and news. Television bridged socioeconomic gaps, democratizing entertainment. It became a powerful medium shaping cultures and societies, making global events local and local events global. Television did more than entertain; it became a universal window to the world, giving everyone, regardless of background, a front-row seat.

Democratization of Access to Any Part of the World – The Digital Age and the Internet

The late 20th century saw the groundbreaking invention of the internet, transforming how we access and share information. In the pre-digital age, gaining insights about distant places was cumbersome, relying on books, travel, or word of mouth. Knowledge had gatekeepers, such as limited libraries and selective institutions. The internet changed this by opening up far broader access. The World Wide Web connected people worldwide. A farmer in India could learn modern farming techniques from a tutorial, and a student in Brazil could collaborate with peers in Japan. This global reach had profound impacts. Businesses entered global markets through e-commerce, artists reached a worldwide audience, and educators taught students across borders. In essence, the internet created a global village, democratizing experiences. No matter where you were, the world was just a click away.

Democratization of Entertainment through Content Creation – The Rise of Platforms

The entertainment industry, long controlled by established media houses, saw a major shift in the 21st century with the rise of digital platforms like YouTube.
Anyone with a camera and creativity could become a content creator, leading to the emergence of YouTubers and democratizing content creation. SoundCloud did the same for musicians, enabling them to bypass record labels. App ecosystems like the App Store revolutionized software development, allowing indie developers to reach millions. These platforms changed not only content creation but also community building; Patreon, for example, allowed fans to support creators financially. This wave of democratization gave diverse voices a platform, enriching the global entertainment landscape. In addition, TikTok, my former employer, was a game-changer that brought entertainers closer to their audience. It shifted the perception of entertainers from distant figures to relatable friends, further democratizing entertainment. In essence, 21st-century digital platforms transformed entertainment, making it community-driven and ushering in a more democratic era.

The Latest Frontier – Democratization of Quantitative Intelligence

Quantitative intelligence, in essence, is the ability to handle and process numerical and logical information. It is the capability that enables one to solve mathematical problems, analyze data, recognize patterns, and make logical deductions. This form of intelligence is integral to fields ranging from engineering and finance to data analytics and research. As we move deeper into the 21st century, we are witnessing an era in which technology, especially generative AI, is playing a pivotal role in amplifying this quantitative intelligence. Unlike traditional software, which operates on explicit programming, generative AI learns from data and can generate new data or insights. This means it can create content, designs, and even music, based on patterns it recognizes in the vast datasets it is trained on. For instance, in the realm of design, my 13-year-old nephew is able to add or remove objects from a photo without any training in Photoshop or similar tools.
In business, non-English-speaking professionals are able to draft emails and responses that stay true to their emotions. In large organizations, important meetings that decide the course of the company’s future are being transcribed in real time and stored so that every employee with access can query them, avoiding any ambiguity about the mission set out by the founders, CEO, or management. The democratizing power of AI lies in its accessibility. As tools and platforms incorporating AI become more available, even small businesses or individuals can harness the power of quantitative analysis that was once the domain of large corporations or specialized researchers. Think of a local retailer predicting inventory needs using AI or an independent artist utilizing AI to optimize

Imagine limiting our understanding of electricity to just a toaster or a refrigerator

The tale I am about to narrate began on a day brimming with the usual hum of creativity at the co-working space where we meet. It was there that Sumit and I found ourselves weaving an itinerary so personalized to my needs that what transpired in those seven minutes blew both our minds. Each minor tweak in the input led the Large Language Model (LLM) to further refine the itinerary, outclassing even what a professional had drafted. That moment was nothing short of an epiphany, marking the true birth of mPrompto in our minds.

How it began

Our venture into the world of AI was not a sudden leap, but a gradual immersion. The seeds of our interest in LLMs were sown during our introduction to ChatGPT, and further nurtured after we experienced the new, AI-aided way of browsing through Microsoft Edge’s chat feature. Then the story took a significant turn when Sumit, mPrompto’s co-founder, visited the Microsoft office in January 2023. He came back with a treasure trove of experience and an insight that we both agreed would be our future path. That visit plunged us both into an exhilarating journey of understanding LLMs and their capabilities, eventually leading us to mould a B2B-friendly application philosophy rooted in this wondrous technology. Every day since that day of epiphany has been a saga of relentless learning and of applying those learnings in the world of AI. There hasn’t been a single day when we haven’t learnt something new, applied our learnings, or admired a paradigm-changing application from our contemporaries in the realm of generative AI. Andrew Ng’s metaphor of AI as electricity further ignited a profound revelation in our minds. As we began to view the world through this new prism, the monumental shifts AI is catalysing in every sphere of life became clearly evident. However, we also identified a glaring lacuna in the common understanding of AI.
Just as understanding electricity goes beyond merely using appliances, grasping AI's potential extends far beyond its current applications. Imagine if we only saw electricity through the lens of gadgets like toasters or refrigerators. That limited view might have hidden the amazing possibilities that electricity has brought into our lives. Likewise, by understanding AI solely through its applications, we risk a myopic view that hides its true transformative potential. The applications are but the tip of the iceberg, a mere glimpse into the vast expanse of possibilities that AI holds. AI, like electricity, has the potential to reshape our world and lead us into an exciting future full of new possibilities.

Path ahead

As our voyage progressed, Sumit and I naturally veered towards a vertical focused on augmenting customer interactions and bridging the gap between the vision of senior management and its execution by employees in a B2B setup. The world of AI unfurled a plethora of use cases before us, ranging from Predictive Analytics to Enhanced CRM systems, Automated Customer Support, and beyond. Although we embarked on a specific vertical, our vision is not bounded by it; we continue to explore further with zeal. While we've articulated our strategic roadmap in our recently published research paper, we're committed to maintaining a jargon-free dialogue around AI. We've initiated a proprietary tool-building process (currently under patent) that we believe will significantly set us apart from our peers. A cornerstone of our insights throughout this journey has been the notion of 'Responsible Human in the Loop', which we see as the bedrock of a resilient AI system in a B2B environment. The harmonious blend of human insight with AI's analytical might is guiding us towards operational superiority. This core philosophy is woven into the fabric of every product we've developed.
As I reflect upon our journey, I feel a profound sense of gratitude towards the tireless AI researchers and leaders. The endeavours of stalwarts in generative AI like Anthropic and OpenAI are the guiding stars of our voyage. The tools and knowledge they provide serve as catalysts, propelling our journey into the captivating realm of AI. Sumit and I are like streams flowing into the vast ocean of dreamers and doers, standing at the threshold of a revolution and moving with the current. As we delve deeper, we realize that the narrative of mPrompto is just one thread in the expansive and intricate tapestry of AI's impact on the business domain and beyond. With this in mind, I intend to start a series of dialogues with you, the reader, in which I aim to illuminate various AI concepts, delve into some fascinating applications within the multi-modal generative AI domain, and offer a sneak peek into the exciting projects we are engrossed in.

Author – Ketan Kasabe, Co-founder: mPrompto